We previously explored “GEO is the new SEO?”. Now it’s time to go a bit deeper technically (not too deep) and understand what’s behind search algorithms and LLMs. It’s tempting to think of GEO as simply SEO combined with LLMs, but that may be wrong, which is why we need to explain both to better understand the difference.

Disclaimer: if you want an article that focuses only on LLMs, you can read this great article or watch this great video. If you want one that focuses only on search algorithms, you can read this great paper. Here we’ll try to keep it simple.

Search Algorithms

If you read the previous article, you saw that Google has a monopoly on search and search algorithms, so obviously that is where we will focus. Unfortunately, we won’t be discussing Archie, WebCrawler, Venice, or Pigeon; I don’t want to make this too long… Let’s break it down!

1996, PageRank

Created by Sergey Brin and Larry Page, PageRank became the foundation of Google and of modern search algorithms. It ranked pages based on links and authority, using the idea that a page is more important if many other important pages link to it.

The model imagines a random internet user clicking from link to link, where each page passes a share of its "reputation" to the pages it links to. The more high-quality pages link to you, the higher your PageRank. This approach helped Google deliver better results than its competitors, but it was quickly exploited by tactics like link farming and paid links, which led to later algorithm updates designed to refine and protect search quality.
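The random-surfer idea can be sketched in a few lines of Python. This is a minimal, textbook-style power iteration, not Google’s production code: the link graph is hypothetical, and the damping factor of 0.85 is the value suggested in Brin and Page’s original paper (the surfer follows a link 85% of the time and jumps to a random page otherwise).

```python
def pagerank(links, damping=0.85, tol=1e-10, max_iter=200):
    """Compute PageRank by power iteration over a dict {page: [outlinks]}."""
    pages = sorted(set(links) | {p for outs in links.values() for p in outs})
    n = len(pages)
    rank = {p: 1.0 / n for p in pages}
    for _ in range(max_iter):
        # Teleport term: the random surfer jumps to any page with probability 1 - damping.
        new_rank = {p: (1.0 - damping) / n for p in pages}
        for page in pages:
            outlinks = links.get(page, [])
            if outlinks:
                # Each page splits its current rank evenly among the pages it links to.
                share = damping * rank[page] / len(outlinks)
                for target in outlinks:
                    new_rank[target] += share
            else:
                # Dangling page (no outlinks): spread its rank over every page.
                for target in pages:
                    new_rank[target] += damping * rank[page] / n
        converged = sum(abs(new_rank[p] - rank[p]) for p in pages) < tol
        rank = new_rank
        if converged:
            break
    return rank


# Hypothetical four-page web.
web = {
    "home": ["about", "blog"],
    "about": ["home"],
    "blog": ["home", "about"],
    "spam": ["home"],  # links out, but nobody links to "spam"
}
ranks = pagerank(web)
for page, score in sorted(ranks.items(), key=lambda kv: -kv[1]):
    print(f"{page:>6}: {score:.3f}")
```

The page that receives the most links ("home") ends up on top, while the page nobody links to ("spam") keeps only the baseline teleport share. That asymmetry is exactly what link farms tried to game by manufacturing inbound links.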

2003, Florida

Google’s Florida update (November 2003) sent shockwaves through the SEO world. I know you remember this thunderclap (I was one year old). It was the first major algorithm update to aggressively target manipulative tactics, especially spammy link schemes. Many innocent sites were caught in the crossfire, losing rankings overnight just before the holiday shopping season. Florida marked the start of Google using statistical link analysis to detect unnatural linking patterns. It forced SEO to evolve, shifting the focus from pure keyword tricks to more “natural”-looking links. The effects of Florida still echo today in how SEO pros think about links and penalties.

2011, Panda

Google Panda marked a major shift in how Google ranks content. Its goal was simple: stop low-quality content from dominating search results. Before Panda, content farms filled the web with thin, duplicated, or spammy articles just to rank. Panda changed that by prioritizing useful, original, and well-structured pages. Sites with weak, repetitive, or ad-heavy content lost rankings fast. Panda is now part of Google’s core algorithm, but its message remains clear: quality beats quantity, and SEO without substance won’t last.

2012, Penguin

Google Penguin was designed to fight link manipulation. Its focus was clear: penalize websites that used spammy or unnatural backlinks to boost their rankings. Before Penguin, many sites climbed to the top by buying links or participating in shady link schemes. Penguin changed the game by devaluing these tactics and rewarding sites with clean, organic link profiles. It also pushed SEOs to focus on earning links naturally through valuable content. Today, Penguin operates in real time as part of Google’s core algorithm, making link quality more crucial than ever.

2013, Hummingbird

A major shift toward natural language processing and conversational queries. Google began understanding search intent beyond simple keywords.

Hummingbird moved Google’s search algorithm from keyword-based matching toward semantic search and intent understanding. Unlike previous updates such as Panda or Penguin, which targeted specific ranking factors, Hummingbird completely rewrote the core search infrastructure to handle complex, conversational queries more effectively. One of its key innovations was leveraging Google’s Knowledge Graph, a vast database of entities and their relationships, to interpret the meaning behind queries instead of merely matching words. By combining the Knowledge Graph with techniques like query parsing and synonym substitution (as shown in the patent diagram with the “Chicago Style Pizza” example), Hummingbird could rephrase queries in a way that reflects user intent.

For instance, in the query “What is the best place for Chicago Style Pizza?”, Hummingbird identifies “place” as potentially ambiguous and substitutes it with “restaurant” based on co-occurrence data, Knowledge Graph associations, and context within the query. This process enables Google to provide more accurate and meaningful results, particularly for voice searches and longer, conversational queries. It also paved the way for later developments like RankBrain and BERT.
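To make the substitution step concrete, here is a toy sketch in Python. It is not Google’s implementation: the `CO_OCCURRENCE` table, its scores, and the `threshold` parameter are made up for illustration, and a real system would draw on query logs and the Knowledge Graph rather than a hand-written dictionary.

```python
# Hypothetical co-occurrence scores: how strongly each candidate replacement
# for an ambiguous term is associated with other words in the query (made-up numbers).
CO_OCCURRENCE = {
    "place": {
        "restaurant": {"pizza": 0.9, "chicago": 0.6, "best": 0.5},
        "location": {"chicago": 0.4, "best": 0.2},
        "venue": {"best": 0.3},
    }
}


def rewrite_query(query, table=CO_OCCURRENCE, threshold=1.0):
    """Replace ambiguous terms with the substitute that best fits the rest of the query."""
    words = query.lower().strip("?").split()
    rewritten = []
    for word in words:
        candidates = table.get(word)
        if not candidates:
            rewritten.append(word)
            continue
        context = [w for w in words if w != word]
        # Score each substitute by summing its association with the context words.
        scores = {
            sub: sum(assoc.get(w, 0.0) for w in context)
            for sub, assoc in candidates.items()
        }
        best, score = max(scores.items(), key=lambda kv: kv[1])
        # Only substitute when the context gives enough evidence.
        rewritten.append(best if score >= threshold else word)
    return " ".join(rewritten)


print(rewrite_query("What is the best place for Chicago style pizza?"))
print(rewrite_query("Best place?"))
```

With “pizza” and “chicago” in the query, “place” is rewritten to “restaurant”; in the short query “Best place?” the context is too thin, so the original word is kept. The threshold is one simple way to avoid rewriting when the evidence is weak.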

2015, RankBrain

It was the first time Google integrated machine learning into search, if I’m not mistaken. As part of the Hummingbird algorithm, RankBrain helps Google better understand search queries by mapping them into "word vectors", allowing it to guess the meaning of unfamiliar phrases and improve results for ambiguous or new searches.

It adapts search results based on user behavior, making the system more responsive to intent and to linguistic nuances such as regional differences: for example, understanding that “boot” usually means footwear in a U.S. query but can mean a car’s luggage compartment in the UK.
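RankBrain’s internals aren’t public, so the following Python sketch only illustrates the general word-vector idea. The three-dimensional vectors are hypothetical, hand-picked numbers (real systems learn hundreds of dimensions from data); averaging the query’s word vectors and comparing them by cosine similarity shows how surrounding words can pull an ambiguous word like “boot” toward one meaning or the other.

```python
import math

# Hypothetical 3-dimensional "word vectors" (illustrative values only).
VECTORS = {
    "boot":     [0.5, 0.5, 0.0],
    "leather":  [0.9, 0.1, 0.0],
    "hiking":   [0.8, 0.0, 0.2],
    "car":      [0.0, 0.9, 0.1],
    "open":     [0.1, 0.7, 0.2],
    "footwear": [1.0, 0.0, 0.0],
    "trunk":    [0.0, 1.0, 0.0],
}


def cosine(u, v):
    """Cosine similarity: 1.0 means the vectors point in the same direction."""
    dot = sum(a * b for a, b in zip(u, v))
    return dot / (math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v)))


def query_vector(query):
    """Average the vectors of the known words in the query."""
    known = [VECTORS[w] for w in query.lower().split() if w in VECTORS]
    if not known:
        raise ValueError("no known words in query")
    return [sum(dim) / len(known) for dim in zip(*known)]


def closest_sense(query, senses=("footwear", "trunk")):
    """Pick the sense whose vector is most similar to the whole query."""
    q = query_vector(query)
    return max(senses, key=lambda s: cosine(q, VECTORS[s]))


print(closest_sense("leather hiking boot"))  # footwear
print(closest_sense("open car boot"))        # trunk
```

The same word, “boot”, lands closer to a different meaning depending on the words around it, which is the intuition behind mapping queries into a vector space.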

2019, BERT

BERT (Bidirectional Encoder Representations from Transformers) was a major leap forward in search understanding. Built on deep learning and the Transformer architecture, BERT captures context from both sides of a word at once, left and right, thanks to its "masked language model" training approach. Unlike earlier models that read text in only one direction, BERT enables Google to better understand subtle nuances in queries, especially long, conversational searches with complex, natural phrasing. BERT was pre-trained on massive datasets like Wikipedia and BooksCorpus, which allowed it to handle tasks such as question answering, natural language inference, and sentence classification with remarkable accuracy.
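BERT itself is a large Transformer, but the benefit of looking at both sides of a masked word can be shown with a deliberately crude stand-in: bigram counts from a tiny, made-up corpus. This is not how BERT computes its predictions (it uses learned attention over the whole sentence); the corpus and the `fill_left_only` / `fill_bidirectional` helpers are hypothetical and only illustrate why right-hand context helps.

```python
from collections import Counter

# Tiny hypothetical training corpus.
CORPUS = [
    "the cat meowed at night",
    "the cat slept on the sofa",
    "the cat ate fish",
    "the dog barked at the mailman",
]

# Count adjacent word pairs (bigrams) across the corpus.
BIGRAMS = Counter()
for sentence in CORPUS:
    words = sentence.split()
    BIGRAMS.update(zip(words, words[1:]))
VOCAB = sorted({w for s in CORPUS for w in s.split()})


def fill_left_only(left):
    """Guess the masked word using only the word to its left."""
    return max(VOCAB, key=lambda w: BIGRAMS[(left, w)])


def fill_bidirectional(left, right):
    """Guess the masked word using the words on both sides."""
    return max(VOCAB, key=lambda w: BIGRAMS[(left, w)] * BIGRAMS[(w, right)])


# Sentence to complete: "the [MASK] barked"
print(fill_left_only("the"))                # cat
print(fill_bidirectional("the", "barked"))  # dog
```

Reading only left to right, “cat” looks like the best guess because it follows “the” most often; once the word on the right (“barked”) is taken into account, “dog” wins.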

For content creators, BERT means it’s more important than ever to answer questions clearly and naturally, rather than just focusing on keywords.

2023, Gemini

Gemini can understand complex questions, perform step-by-step reasoning, and generate content. It combines advanced reasoning, planning, and the ability to process text, images, and video together. Gemini powers features like AI Overviews, AI-organized results, and built-in planning tools.

For SEO, Gemini pushes search toward a more conversational and context-focused experience. Search has evolved from a list of results into a personal assistant for research and discovery.

Gemini marks a shift. This is where search fully steps into the era of large language models.

LLMs

Well, this part is more difficult to explain, but let’s try to understand the basics and link them directly to Generative AI. You can take a break now and watch this video (highly recommended):

Great to have you back! LLMs are a small part of the wider field of artificial intelligence:

Here’s how LLMs work (simplified):

Training on massive text data: LLMs are trained by reading huge amounts of text from books, websites, forums, and more.

Learning patterns: The model learns the patterns of how words, phrases, and sentences are typically written and connected.

Predicting the next word: The core task of an LLM is to predict what comes next in a text (technically the next token, which can be a whole word or part of one). For example, if you write “The sky is”, the model predicts a likely next word such as “blue.”

Generating text: By predicting one word at a time and repeating the process, LLMs can generate entire sentences, paragraphs, or pages of text. This is what we call Generative AI.

LLMs don’t simply repeat what they have seen before. They recombine ideas and patterns to generate new content, which is why they’re called generative. They can answer complex questions, write essays and stories, and even write code.
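Here is a deliberately tiny Python illustration of the learning, predicting, and generating steps described above: a bigram model that counts which word tends to follow which, then generates text by repeatedly predicting the next word and feeding it back in. Real LLMs use neural networks over tokens with billions of parameters; the training text and the greedy “pick the most frequent follower” rule are simplifying assumptions.

```python
from collections import Counter, defaultdict

# Tiny, hypothetical training text.
TEXT = "the sky is blue . the sea is blue . the sky is clear today ."


def train_bigram_model(text):
    """Count which word follows which: the 'patterns' this toy model learns."""
    words = text.split()
    model = defaultdict(Counter)
    for current, following in zip(words, words[1:]):
        model[current][following] += 1
    return model


def predict_next(model, word):
    """Return the most frequent follower of `word`, or None if it was never seen."""
    followers = model.get(word)
    if not followers:
        return None
    return followers.most_common(1)[0][0]


def generate(model, prompt, max_words=5):
    """Generate text one word at a time, feeding each prediction back in."""
    words = prompt.split()
    for _ in range(max_words):
        nxt = predict_next(model, words[-1])
        if nxt is None or nxt == ".":  # stop at an unknown word or end of sentence
            break
        words.append(nxt)
    return " ".join(words)


model = train_bigram_model(TEXT)
print(predict_next(model, "is"))  # blue
print(generate(model, "the sky"))  # the sky is blue
```

Notice that the model can only continue with words it has seen in its training text, a small-scale version of the data dependence discussed next. Real LLMs usually sample from the predicted distribution instead of always taking the top word, which is one reason their output varies.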

Answer

As we’ve seen, to deliver the best possible answers, LLMs need one essential ingredient: data. Without it, they’re powerless. Data is their fuel: no data, no answers, no predictions, no magic. They’ve been getting this fuel by scraping and collecting vast amounts of text from the internet: articles, books, forums, your conversations with them, everything. Over the last few months, they have also started relying on a mix of training data and external indexes to fetch information.

Even though these models are trained on huge, high-quality datasets, that data can quickly become outdated. Most LLMs only “know” what they were trained on, meaning their knowledge might be weeks, months, or even years old. Ask an offline LLM today, “Who’s the current pope? Do not use the web.” (Thanks Francisco, you’re a beast, love this example).

The accuracy of the answer depends on the data the model was trained on, so it may or may not be correct. What if there has been a recent change? The model won’t know.

That’s why we now see Generative AI tools that connect to the web. These hybrid models mix trained knowledge with real-time browsing, giving fresher answers. Even then, they only access part of the web, not all of it.
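The staleness problem can be illustrated with a toy model of a fact that changes over time. The names, dates, and the `answer` function are hypothetical; real systems don’t store facts as neat timelines, but the effect is the same: an offline model answers as of its training cutoff, while a web-connected one can answer as of today.

```python
from datetime import date

# Hypothetical timeline of a fact that changes: (date it became true, value).
TIMELINE = [(date(2020, 1, 1), "Alice"), (date(2024, 6, 1), "Bob")]


def latest_known(timeline, as_of):
    """Return the most recent value recorded on or before `as_of`, or None."""
    known = [(when, value) for when, value in timeline if when <= as_of]
    return max(known)[1] if known else None


def answer(timeline, training_cutoff, today, web_enabled):
    """Offline: frozen at the training cutoff. Hybrid: look the fact up as of today."""
    as_of = today if web_enabled else training_cutoff
    return latest_known(timeline, as_of)


cutoff, today = date(2023, 12, 31), date(2025, 1, 1)
print(answer(TIMELINE, cutoff, today, web_enabled=False))  # Alice (outdated)
print(answer(TIMELINE, cutoff, today, web_enabled=True))   # Bob (fresh)
```

The offline answer isn’t a bug in the model’s reasoning; it is simply the last thing the model saw before its cutoff.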

LLMs are brilliant, but they are what they eat, and what they eat is data. Without fresh data, they risk being outdated, no matter how smart they seem. Hopefully humans are more than just what they eat, but just imagine.

About GEO

As we’ve seen, in generative search, data is the real currency. The more accessible, structured, and high-quality your data is, the more likely it is to be cited in an AI-generated answer. But it’s not just about having data, it’s about how that data is structured and presented.

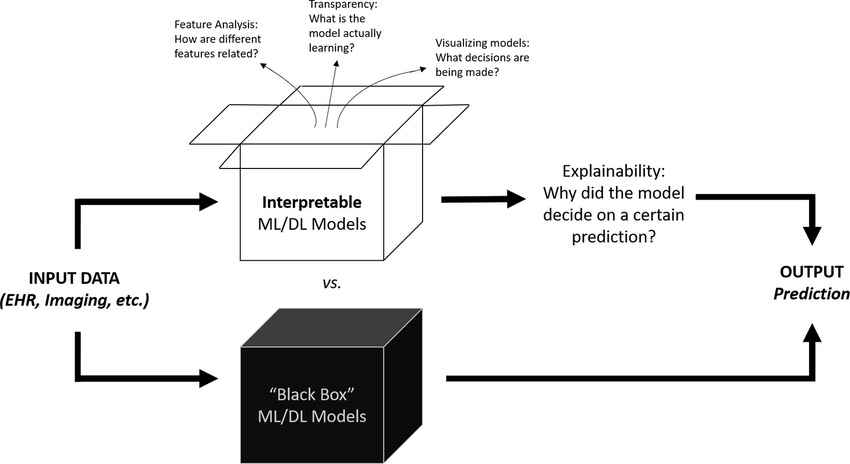

Generative Engine Optimization (GEO) operates inside a black box.

AI models decide what to include based on complex internal “reasoning” that is invisible to outsiders (no explainability). You can’t directly control these models or see exactly how content is processed. Still, some people are trying to uncover and influence what happens inside this black box:

“You need to make your content more ‘generative-friendly.’ This involves including specific types of information that generative models seem to favor. Statistical data, clear citations, and direct quotations from reputable sources tend to get more attention. Content that is written in a simple, fluent, and structured manner also tends to be more usable by these models.”

Fundamentally, GEO is about making your data more attractive and easier to use for AI models. In this system, your ability to be included in answers depends on how well your content fits into the model’s understanding of relevance, reliability, and readability. For example, OpenAI’s SearchGPT relies on Bing’s index to retrieve live data. That means the underlying model can only provide answers that are either already part of its training or accessible via Bing’s indexed sources. If your site isn’t indexed by Bing, you’re invisible, not just on Bing, but also inside ChatGPT’s answers. The real questions are: Are you part of the data? Are you well indexed on Bing? We'll figure it out tomorrow (but also below).
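The consequence of that dependency can be sketched with a toy retrieve-then-answer pipeline in Python. Everything here is hypothetical: the `INDEX` dictionary stands in for a search index such as Bing’s, the word-overlap scoring is a crude placeholder for real ranking, and we make no claim about how SearchGPT actually retrieves or selects sources. The point is structural: a page that isn’t in the index can never come back from retrieval, so it can never be cited.

```python
# Hypothetical search index: URL -> page text. Only these pages can be retrieved.
INDEX = {
    "https://example.com/pizza-guide": "best chicago style pizza restaurants guide",
    "https://example.org/deep-dish": "chicago deep dish pizza history",
}


def retrieve(query, index, k=2):
    """Rank indexed pages by how many query words they contain (toy scoring)."""
    terms = set(query.lower().split())
    scored = []
    for url, text in index.items():
        overlap = len(terms & set(text.split()))
        if overlap:
            scored.append((overlap, url))
    scored.sort(reverse=True)
    return [url for _, url in scored[:k]]


def answer_with_citations(query, index):
    """The generated answer can only cite what the retrieval step returned."""
    return {"query": query, "citations": retrieve(query, index)}


my_site = "https://my-pizzeria.example/menu"  # hypothetical page: great content, never indexed
result = answer_with_citations("best chicago pizza", INDEX)
print(result["citations"])
print(my_site in result["citations"])  # False: not indexed, so never cited
```

No amount of on-page quality changes the outcome for `my_site` here; getting into the index is the precondition for everything else.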

Analysis

A recent analysis of 40,000 AI answers and 250,000 citations from ChatGPT, Perplexity, and Gemini shows how AI decides which sites to mention, and the patterns are clear. The question you might ask yourself on a Sunday night: “Is there a correlation between LLM mentions and website category?”

So, yes. Here is what we found:

AI tools still favor trusted sources.

Third-party articles, news, and reviews still drive most mentions, but user-generated content, like Reddit threads, GitHub discussions, and YouTube videos, is growing fast in AI results.

AI also adapts citations based on where users are in their buying journey. Early-stage queries lean on blogs, reviews, and press articles. Mid-stage searches pull in more user experiences from forums and review sites. When users are close to making a decision, AI favors direct product pages and competitor sites for specific details.

Large brands naturally dominate at the decision stage: AI engines tend to cite them more often, likely because of their broad digital footprint and strong name recognition.

Perplexity and Gemini both include around six citations per answer, pulling from a wide range of sources, while ChatGPT remains far more selective, averaging about 2.6 citations per answer (though this is changing).

Each AI engine also favors different user-generated content platforms. Perplexity leans on YouTube and PeerSpot. Gemini taps into Medium, Reddit, and YouTube. ChatGPT often cites sources like G2, Gartner Peer Reviews, and LinkedIn.

We’re already seeing AI citation choices shift web traffic. ChatGPT often drives users to educational, technical, and research sites, reflecting its younger, student-heavy audience, unlike traditional search engines that favor commercial or entertainment content.

These are assumptions, hypotheses, and thoughts. As a reminder, we don’t really know. We make assumptions, test things, and find patterns, but things can change quickly. Do we really understand it?

Conclusion

GEO isn’t just the next evolution of SEO; it’s a different game entirely. It rewards structured, credible, and retrievable content rather than keywords or backlinks. New game, new rules, new tips, new ecosystems, new knowledge, new people.

Tomorrow, we’ll look at some recommendations for you.